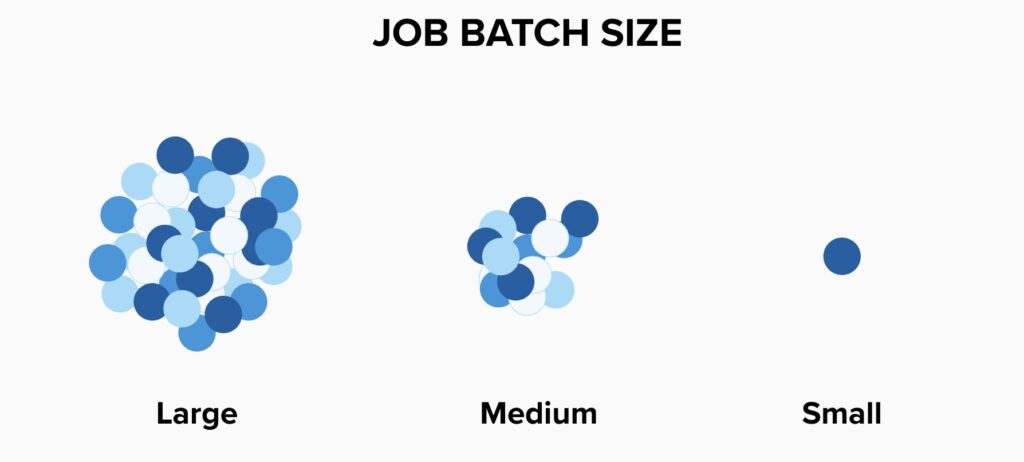

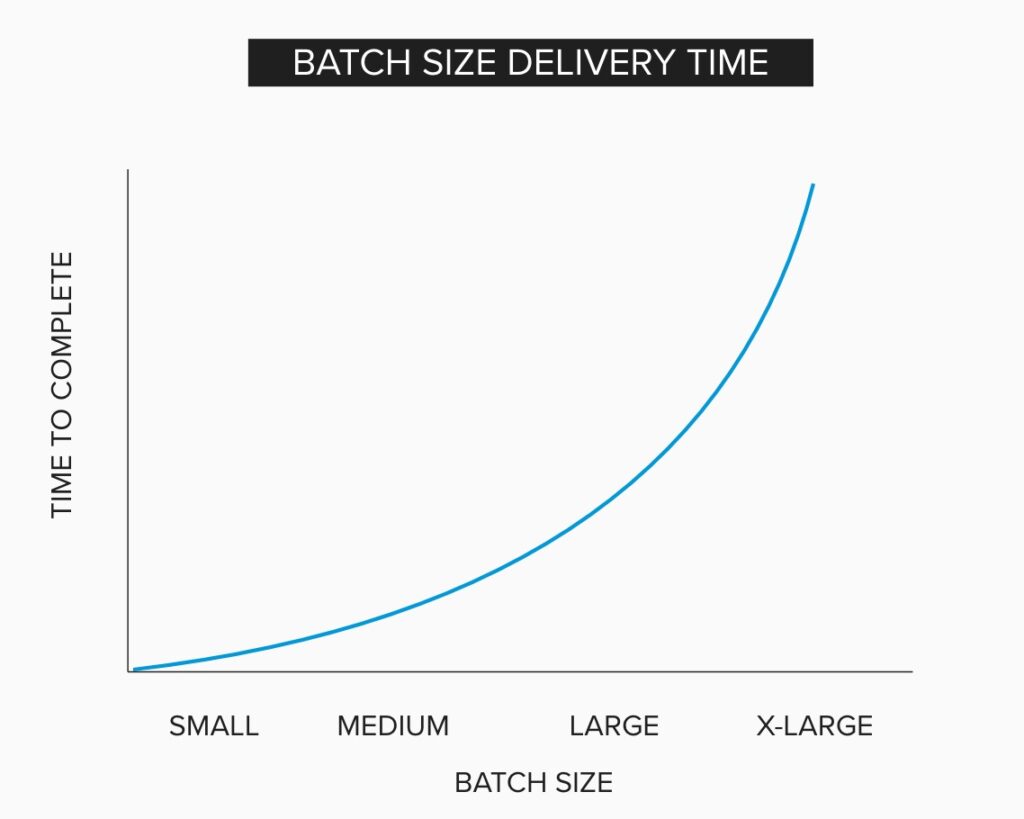

One of the lessons that we have learned from manufacturing and assembly is that the larger the size of a job, the longer it takes to process. The relationship between the size of the work item and the length of time it takes is exponential, not linear. If you want work to get done more quickly, then reducing the size of individual work items is a key to speeding things up.

To help you, I am going to give examples of large and small batches of work to make this manufacturing principle more relatable to people doing other kinds of work, explain why large batches of work are a bad idea in general, and then present a method of decomposing a large batch of work into smaller entities.

Batch Size Examples

I define a large batch of work to be any to-do, work item, assignment, task, or chore that has multiple components. Examples of large batches of work:

- Clean the house

- Plan a party

- Build a software application (or a feature with many elements)

Think about your favorite to-do list application. If you find yourself taking a to-do item and adding subtasks below it, then you have a large batch of work. For example:

- Clean the house
    - Clean the downstairs bathroom
    - Vacuum the den
    - Dust the furniture in the den
    - Wash the dishes
    - Clean the oven
    - Mop the kitchen floor
- Plan a party
    - Make a list of invitees
    - Pick a location
    - Reserve the location
    - Send out invitations
        - Purchase stationery
        - Print invitations
        - Buy stamps
        - Address invitations
        - Mail the invitations
- Build a software application
    - Create a login screen with password security
    - Create a settings screen
    - Build an account screen
    - Take credit card payments

As you can see, each of the three examples is composed of smaller batches of work. I use the word “batch” because it is the common term in lean literature for grouped-together work items in manufacturing.

Notice that in our examples some of the sub-items have their own layer of sub-items. If you use your imagination, you will start to see one or possibly even two more layers below those. You can see how the work is batched together.

If we set out to build a software application, clean a house, or plan a party, that is a large batch of work. In a factory, a batch might be a pallet of packages to be shipped or a stack of bricks to be fired in a kiln. A batch could also be something like a large stack of laptops on shelves in a warehouse.

For our purposes, I am defining size by the complexity of the work to be done, not the raw estimated time to complete it.

Batching Ideas is Useful

It is natural that we try to chunk work together into single conceptual ideas for the purposes of goal-setting, goal-realization, and communication. It isn’t useful for us to talk about LED screens, push buttons, remote controls, IR, Wi-Fi radio chips, cooling systems, and power supplies when all we have to say is “a television.” Chunking concepts is how language works. It is how you are reading these words. Your mind is not assembling letters into words; only children learning to read do that. Your mind recognizes the shape of each word as a chunk of information and immediately knows what it says. Advanced redrears recognize and predict whole phrases. It’s why you can still read the word redrears as readers in the previous sentence. You could read it upside-down.

The Cost of Complexity

When we actually do the work, doing it in large batches causes a host of difficulties. Manufacturers in Japan learned this in the 1950s. In the 1990s, American manufacturing started to catch up, and in some ways it is still catching up. Reducing the size of work items helps work flow. Why?

As we tie more small items together into a larger box treated as a single item, complexity increases. Complexity is the way things are connected in often unknown, and therefore surprising, ways. If I make a small change to a thing and then test to see how it works, there isn’t much to test, and if there is a problem, I can easily find it. If I make 40 changes and then run a test that fails, which change caused the problem? Complexity is a killer, and it is the main way I define a batch as large.
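To make the 40-changes question concrete, here is a minimal Python sketch. It assumes exactly one change is bad and that once the bad change is applied the test keeps failing, which is the same assumption `git bisect` relies on. Under that assumption, a batch can be searched by halving it; the function names `find_bad_change` and `possible_interactions` are hypothetical, and counting pairs of changes is only one rough proxy for how many hidden interactions a batch can contain.

```python
import math
from typing import Callable, Tuple


def find_bad_change(n_changes: int, breaks: Callable[[int], bool]) -> Tuple[int, int]:
    """Binary-search a batch of changes for the one that broke the test.

    breaks(k) reports whether the test fails with only the first k changes applied.
    Assumes breaks(0) is False, breaks(n_changes) is True, and that failure is
    monotonic (once the bad change is in, the test stays broken).
    Returns (1-based index of the bad change, number of extra test runs).
    """
    good, bad = 0, n_changes  # invariant: breaks(good) is False, breaks(bad) is True
    runs = 0
    while bad - good > 1:
        mid = (good + bad) // 2
        runs += 1
        if breaks(mid):
            bad = mid
        else:
            good = mid
    return bad, runs


def possible_interactions(n_changes: int) -> int:
    """Distinct pairs of changes that could interfere with each other: n choose 2."""
    return math.comb(n_changes, 2)


if __name__ == "__main__":
    culprit = 17  # hypothetical: the 17th of 40 changes is the bad one
    print(find_bad_change(40, lambda k: k >= culprit))  # (17, 5)
    print(possible_interactions(1))   # 0
    print(possible_interactions(40))  # 780
```

The search only works under the single-culprit, monotonic assumption. Once two changes can interact, the pairwise count shows how quickly the space of suspects grows: a single change has nothing to interfere with, while 40 changes have 780 possible pairs.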

A software development project is a large batch of work. It has a single delivery date, a large batch of scope, and a single budget, and it is run on the assumption that everything listed in the scope must be delivered together. While it is being built, the complexity is enormous, and delays and defects pile up. When it is released, debugging and finding problems is a horrific exercise. The larger the release, the more likely a company is to set up a war room to manage communication about the issues found, prioritize them, and expedite their resolution to prevent damage to its brand.

The effects of complexity are:

- Overhead Costs. As complexity increases, the amount of coordination overhead increases exponentially. Costs go up.

- Risk. With more things moving at once, finding the source of troubles becomes more problematic. The chance that touching one thing affects another increases. The damage we could cause with a single mistake is amplified.

- Longer Timelines. As a complex item moves through a system, it moves at a speed that is slower than the sum of the time it would take to build the individual parts. It requires more time to deliver a project with 30 requirements than 30 projects each with one requirement.

- Reduced Learning. Because we deliver more, less frequently, over longer timelines, it is harder for a person, team, or organization to learn from the experience. So many things happen over a long timeline that it can be difficult to list, discuss, and learn from them. People do not do well trying to review 100 things that went wrong and improve from that list. At best they will remember three and succeed at fixing perhaps one.

- Low Agility. Because feedback is delayed, learning is delayed and batched up, timelines are longer, risk is higher, and overhead is greater, an organization that works this way simply cannot pivot quickly without pain. When it does pivot, huge plans are thrown out, individuals and divisions that fail to deliver on huge promises to powerful people absorb massive political costs, and what remains is often not salvageable. Coming back to it later introduces old work into a new environment where it is no longer valid and must be reworked from scratch.

Large batches of work are bad, so how do we decompose work into smaller batches?

Decomposing Work

Decomposing work well requires knowing our goals, which are to reverse the effects of large batches:

- Shorter timelines, faster feedback, & earlier learning. I want feedback as soon as possible, on each sub-item we do, increasing the probability that we deliver the right thing in the right way.

- Reduced risk. I want to deliver work in such a way that once delivered, if it is the wrong thing, or if it is problematic, reversing what we have done or fixing it is as simple as possible.

- Low overhead. I want to work in a way that reduces the amount of coordination with others. If I can work on one thing at a time with focus, it limits the number of people yelling at each other about their dependencies on one another.

Thus, the large batch of work “Cleaning the House” can be decomposed pretty easily into smaller elements that are still usable. Taking just the downstairs bathroom, for example:

- Clean the shower

- Clean the tub

- Clean the toilet

- Clean the mirror

- Clean the counters

- Mop the bathroom floor

Since I like feedback, particularly when I am doing something I have not already done the same way many times, I will break these items down even further and deliver them in pieces.

- Clean the shower
    - Clean the showerhead
    - Clean the tile in the shower
    - Clean the glass walls in the shower
    - Clean the floor of the shower
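The decomposition above is a tree: a large batch is a node with several sub-items, and the small batches you actually deliver are the leaves. Here is a minimal Python sketch of that structure. `WorkItem` and its methods are hypothetical names, treating "more than one sub-item" as large simply mirrors the definition given at the start of this article, and grouping the bathroom items under "Clean the downstairs bathroom" is a reading of the example rather than something the list states.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class WorkItem:
    """A to-do that may contain smaller to-dos (hypothetical model)."""
    name: str
    subitems: List["WorkItem"] = field(default_factory=list)

    def is_large_batch(self) -> bool:
        # Mirrors the article's definition: an item with multiple components.
        return len(self.subitems) > 1

    def deliverables(self) -> List[str]:
        """Leaf items in depth-first order: the small batches to finish one at a time."""
        if not self.subitems:
            return [self.name]
        leaves: List[str] = []
        for item in self.subitems:
            leaves.extend(item.deliverables())
        return leaves


if __name__ == "__main__":
    shower = WorkItem("Clean the shower", [
        WorkItem("Clean the showerhead"),
        WorkItem("Clean the tile in the shower"),
        WorkItem("Clean the glass walls in the shower"),
        WorkItem("Clean the floor of the shower"),
    ])
    bathroom = WorkItem("Clean the downstairs bathroom", [
        shower,
        WorkItem("Clean the tub"),
        WorkItem("Clean the toilet"),
        WorkItem("Clean the mirror"),
        WorkItem("Clean the counters"),
        WorkItem("Mop the bathroom floor"),
    ])
    print(bathroom.is_large_batch())
    for step in bathroom.deliverables():
        print("-", step)
```

Each leaf is a candidate for a finished, reviewable piece of work; whether it is the right size is a separate judgment.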

Receiving feedback on each step seems like it would be more overhead and less efficient, but it is far more efficient in the long run. If I clean the entire shower, and then my customer complains the showerhead is not cleaned properly, the effort to redo that work will probably mean I have to redo the rest of the shower anyway, causing the whole batch to become waste.
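The shower argument can be put into rough numbers. The Python sketch below assumes each step independently has the same chance of being rejected by the customer, that a rejection discovered only at the end forces the whole batch to be redone (as in the showerhead example), and that every review costs a fixed amount of effort. It counts only the first round of rework, and the probabilities and costs are illustrative assumptions, not measurements.

```python
def batched_cost(n_steps: int, p_reject: float, review_cost: float) -> float:
    """Expected wasted steps plus review effort when the whole job is reviewed once.

    Any rejected step means every step is redone, so the waste is n_steps times
    the probability that at least one step is rejected.
    """
    p_any_rejected = 1 - (1 - p_reject) ** n_steps
    return n_steps * p_any_rejected + review_cost


def incremental_cost(n_steps: int, p_reject: float, review_cost: float) -> float:
    """Expected wasted steps plus review effort when each step is reviewed on its own.

    Only a rejected step is redone, but we pay for n_steps reviews.
    """
    return n_steps * p_reject + n_steps * review_cost


if __name__ == "__main__":
    # Hypothetical numbers: 4 shower steps, a 20% chance each is rejected,
    # and a review that costs a tenth of a step.
    print(round(batched_cost(4, 0.2, 0.1), 4))      # 2.4616
    print(round(incremental_cost(4, 0.2, 0.1), 4))  # 1.2
```

With a very high review cost or a very low rejection rate, the batched approach can come out ahead in this model, which is one way to see why decomposition has a lower limit as well as an upper one.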

I am stretching the metaphor, but only slightly.

To decompose work, use these rules:

Make it usable and therefore valuable. To break down cleaning a shower, make sure each smaller work item is delivered as a finished service. A clean showerhead is finished. A sketch of what the finished shower would look like is not a finished service and is not usable. A document listing all of the things to clean is also not a finished service. Unfinished work produces feedback that sounds like this: “It isn’t finished. Are you going to finish it? Is that finished? When will it be finished?” Divide work into usable, finished services, features, or products. That doesn’t mean “complete” and ready for retail sale. It means finished. Think carefully about what that could mean.

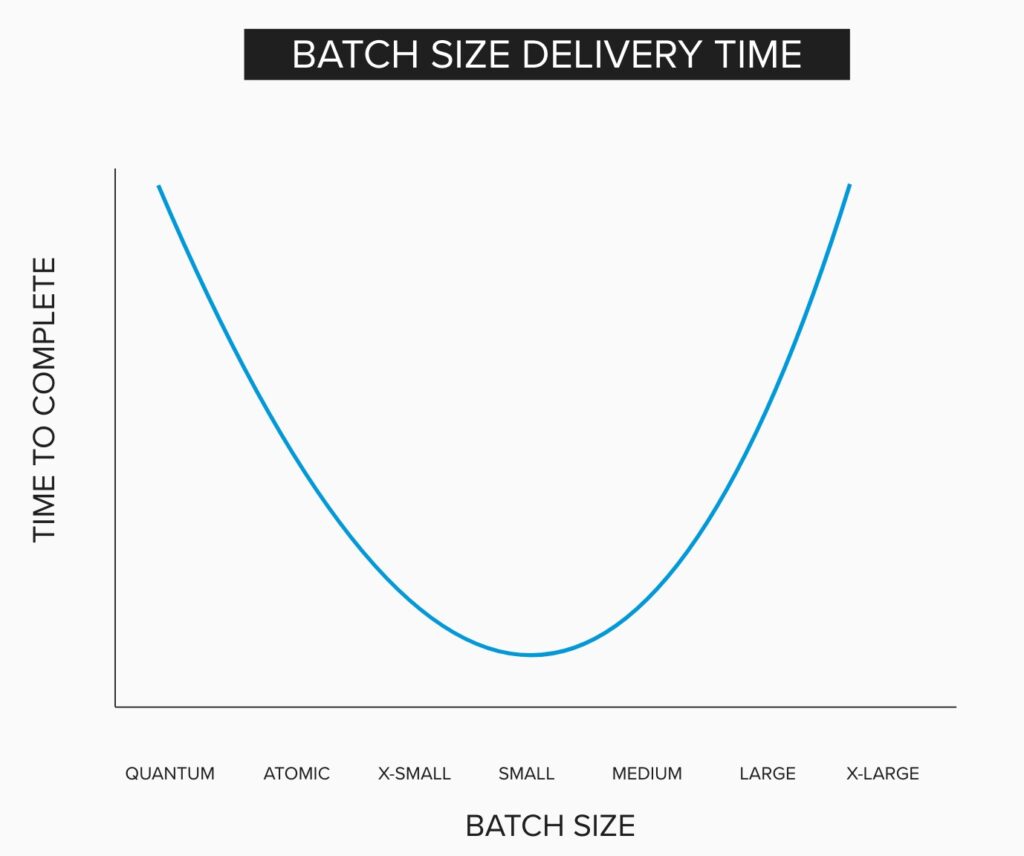

Make it small, but not too small. Imagine a curve: at one end, work items are so big they are impossible to get done quickly or well; at the other end, work is decomposed to the quantum level, causing so much overhead that it is just as bad as a large batch. For example, don’t break the showerhead cleaning down into each tiny nozzle in the device. You can easily imagine that inspecting every molecule or atom in the shower would lead to wasteful overhead. This translates to any work. There is a sweet spot for size.

Insist on customer feedback. Get feedback on finished work from customers, such as a friendly subset or a focus group. Get that feedback directly from them, not through middlemen. Interact with them frequently to show them finished services or products. The goal is more frequent feedback on smaller things.
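The "sweet spot" in the second rule can be pictured as a U-shaped cost curve. The Python sketch below is a hypothetical model, not a formula taken from the manufacturing literature: a fixed amount of work is split into batches of size b, each batch carries a fixed overhead (hand-offs, reviews, context switches), and the complexity cost inside a batch grows with the square of its size, echoing how interactions multiply as items are tied together. The function names and all parameter values are made up for illustration.

```python
def total_cost(work: int, batch_size: int, overhead: float, complexity: float) -> float:
    """Hypothetical cost of finishing `work` units in batches of `batch_size`.

    Each batch pays a fixed `overhead`; inside a batch, complexity cost grows
    with the square of its size. The number of batches is treated as
    work / batch_size for simplicity.
    """
    batches = work / batch_size
    return batches * overhead + batches * complexity * batch_size ** 2


def best_batch_size(work: int, overhead: float, complexity: float) -> int:
    """Try every whole-number batch size and return the cheapest one."""
    return min(range(1, work + 1),
               key=lambda b: total_cost(work, b, overhead, complexity))


if __name__ == "__main__":
    # Illustrative parameters only.
    for b in (1, 5, 10, 20, 100):
        print(b, round(total_cost(100, b, overhead=5.0, complexity=0.05), 2))
    print("best:", best_batch_size(100, overhead=5.0, complexity=0.05))
```

With these made-up numbers the cost falls from 505 at a batch size of 1 to 100 at a batch size of 10, then climbs back to 505 at 100. In this particular model the minimum sits at the square root of overhead divided by complexity; the point is the shape of the curve, not the exact number.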

Science, Empiricism, and Natural Work

This is how we naturally work in some fields. I find it hilarious when I explain this concept to software developers and they push back on it, because this is how all software is created. They make a small change, and then they run the code to see what happens. They don’t write thousands of lines of code and only then execute it; that would result in a ton of debugging and hunting for problems. Programmers naturally work in small batches.

Scientists do this as well. There’s no point in setting up an experiment with no controlled variables and trying to figure out what could be causing the effects we see. They run an experiment controlling for everything they can think of, rule out a cause, and then run another experiment with a different uncontrolled variable and everything else controlled. They do this over and over, disproving hypothesis after hypothesis, ruling out explanations until the truth is what is left.

For a business, this is a powerful notion, yet almost no one in Corporate America will accept it! Instead of running a new project with 100 requirements for months, why not build only a few of them cheaply and get them in front of people to find out whether this is a good idea first? Maybe it is not a good idea, and we should scrap the whole thing before we spend any more money. Maybe our ideas need serious changes first. Evidence-Based Management is most powerful when we use small batches of work to clarify our measures. If this were widely understood, most organizations would drastically change their reward systems, and few would ever run a fixed-scope project to a date again.