When organizations adopt an Agile framework or attempt to work in a more agile way, there’s often an expectation that they’ll be able to “do more with less.” While that phrase sounds appealing, it’s important to acknowledge that it often conflicts with the laws of physics—except in two notable cases: automation and the reduction of rework.

Both of these strategies enable teams to unlock additional capacity without adding headcount, but reducing rework, in particular, addresses a problem that often goes unnoticed. The time spent fixing defects or redoing work due to misaligned requirements can consume a significant portion of a team’s capacity. By eliminating wasteful rework, organizations not only recover time but also improve overall flow and product quality.

In this article, we’ll explore how software development teams can systematically reduce rework, focusing on building quality into every step of the process and leveraging automation to prevent defects before they occur. By distinguishing between the waste of fixing errors and the iterative improvement that is a natural part of agile processes, we’ll outline actionable strategies to help your team achieve more without working harder.

The Cost of Defects and Rework

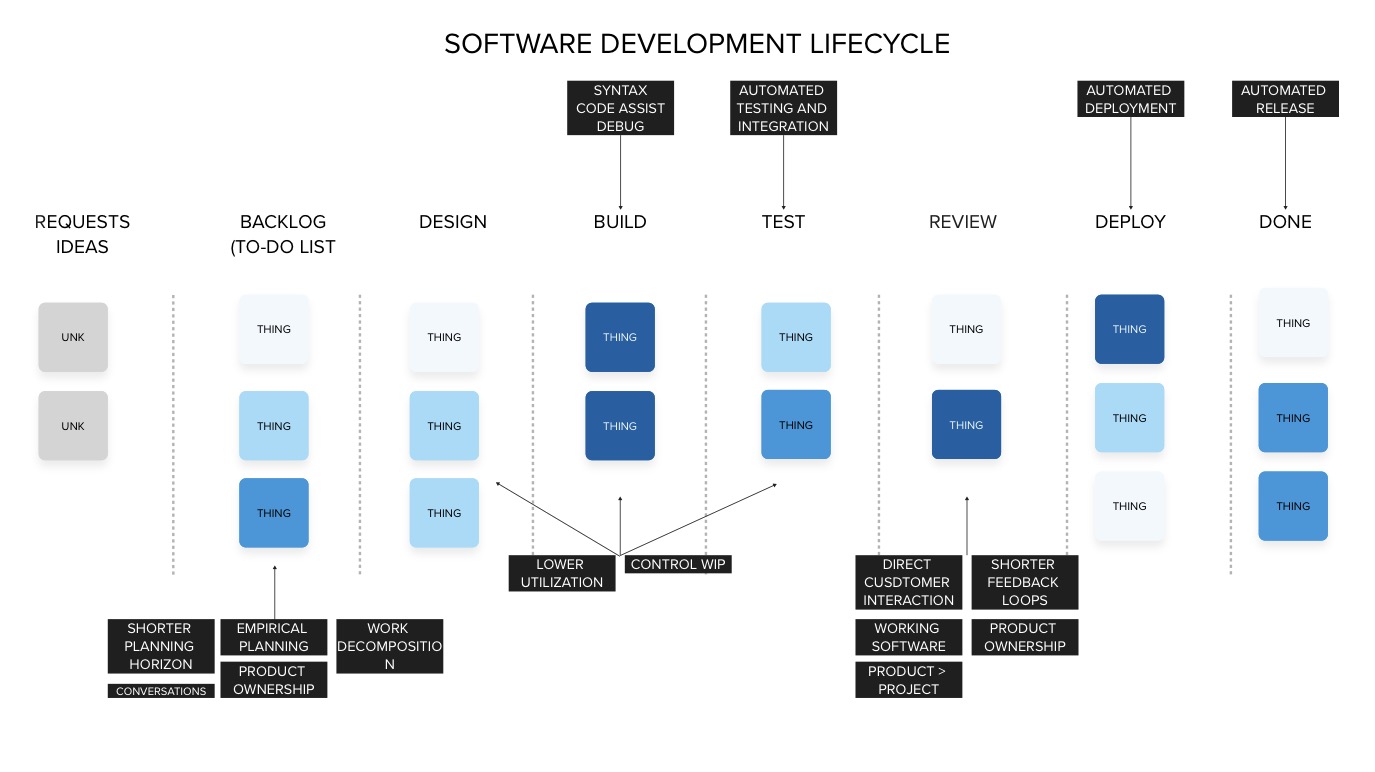

Defects are the main source of rework. In software development, we can identify multiple types of defects by looking at every step in the process. Cost escalation over time is a known effect of defects: the later they are discovered in the process, the more damage they cause and the more it costs to fix them.

Working backward from the most expensive defects, there are the bugs, errors, features that fail to perform as intended, and other embarrassments that happen when the product is shipped to customers and they start to complain that things do not work or contain errors. These are the most expensive kind, because they impact company reputation, anger shareholders, undermine marketing efforts, and damage the performance of the product in the marketplace. Defects can be death for a product or even a company.

Defects cost less when they are found by a limited subset of customers in a controlled environment. The smaller this subset, the less expensive the defects. This is why limiting an initial release to a few markets works as risk mitigation: the smaller the exposure, the more of the risk is managed.

When defects are found in testing, they cause rework within a team. Code is built, testers find problems, code is sent back to developers and is built again, and then it is tested again. Every time the same code travels this path, it is using up expensive development capacity that could have been occupied by other valuable work.

When defects are found in designs, developers have to send the designs back to be corrected, causing a loop similar to the one with testers.

Defects found in requirements and communication are often caused by hand-offs where customers ask for things, and layers of employees within the company handle the requests and interpret them before they are delivered to the developers who have no first-hand contact with the customers. The telephone game of requirements can cause developers to build, testers to successfully test, and then reviews to reveal, “That’s not what I meant.”

There are lots of statistics thrown around about how much defects cost the software industry. I am not sure that any of these estimates are accurate. But some think defects may cost trillions every year in the US economy alone.[1] How accurate is that number? Who cares? Even if it is off by a large margin in either direction, it is terrifying!

Reduce Rework

The best way to increase the capacity of any system is to remove any rework being performed. Imagine a factory producing automobiles. Any time that an automobile is pushed back up the assembly line, that’s rework, and it causes mayhem. The same is true for any development team. Defects lead to rework, so the best way to eliminate rework is to prevent defects.
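
As a rough illustration of why removing rework frees capacity, here is a minimal sketch of the arithmetic. The function name `effective_capacity` and the numbers (a 400-hour iteration, rework falling from 30% to 10%) are hypothetical, chosen only to show the calculation; they are not measurements from any real team.

```python
def effective_capacity(total_hours: float, rework_fraction: float) -> float:
    """Hours left for new work once rework has taken its share."""
    if not 0.0 <= rework_fraction <= 1.0:
        raise ValueError("rework_fraction must be between 0 and 1")
    return total_hours * (1.0 - rework_fraction)


# Hypothetical team with 400 hours available per iteration.
before = effective_capacity(400, 0.30)  # 30% of the time goes to rework
after = effective_capacity(400, 0.10)   # rework reduced to 10%
gain = (after - before) / before        # relative increase in hours for new work

print(f"before: {before:.0f} h, after: {after:.0f} h, gain: {gain:.0%}")
```

With these made-up inputs, the same headcount has roughly 29% more time for new work, without anyone working longer hours.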

Reduce, and where possible eliminate, the defects that escape each step in the process. Testing does not prevent defects. Testing finds defects and prevents the embarrassment and financial cost of shipping them. Defects cannot be tested away. Rather, defects must be handled at the time of creation. Automation is a great way to catch bugs and syntax errors up front, so that less capacity is spent making repairs.
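
One small example of catching a defect at the moment of creation is an automated syntax check that runs before code is ever executed or handed to a tester. The sketch below uses Python's standard `ast.parse`; the helper name `find_syntax_error` and the sample snippets are hypothetical, and real teams would more likely rely on a linter or compiler wired into their editor or build.

```python
import ast
from typing import Optional


def find_syntax_error(source: str) -> Optional[str]:
    """Return a short description of the first syntax error, or None if the source parses."""
    try:
        ast.parse(source)
    except SyntaxError as err:
        return f"line {err.lineno}: {err.msg}"
    return None


good = "def area(w, h):\n    return w * h\n"
bad = "def area(w, h)\n    return w * h\n"  # missing colon after the signature

print(find_syntax_error(good))  # no error found
print(find_syntax_error(bad))   # description of the problem; wording varies by Python version
```

A check like this costs seconds. The same mistake found in testing costs a full trip around the build-and-test loop.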

Empirical approaches help shift defect prevention left: iterative, incremental delivery lets customers review working product early, which prevents building the wrong thing. Empowered teams move decision-making to the team itself, so the decision-maker can help developers understand what they are being asked to build and why they are building it. This further prevents the defects created by large requirements and hand-offs of documentation.

It is important to distinguish between rework caused by mistakes and rework caused by customers experiencing finished work and having new ideas. The former is to be eliminated wherever possible. The latter is a desired state until the day comes when volatility, uncertainty, complexity, and ambiguity are no longer concerns. That day has not yet come for product development.

Automation

Automation is increasingly prevalent. Large Language Models are increasingly used for a variety of tasks, allowing human beings to get more done, at higher quality, more quickly. But there is more automation to consider than this. Much more!

Automation can help reduce potential rework, cost, and risk:

- Debugging. Programming “grammatical errors” can be found by automation far more easily than by humans.

- Regression testing. Tests to make sure that pre-existing functions were not damaged during work and still perform as expected.

- End-to-End testing. Tests to ensure that various workflows complete as expected and do not error out or give unexpected results from start to finish.

- Unit testing. Tests written to ensure the immediate work being done functions as expected.

- Performance testing. Tests to ensure that the system and the built functions process as quickly as expected.

- Load testing. Tests to ensure that when the maximum number of users are in the system at once, it can still perform as expected.

- Security scans. Tests to make sure that common vulnerabilities have been accounted for.
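
To make the unit and regression testing items above concrete, here is a minimal sketch using Python's standard `unittest` module. The function `apply_discount`, its rules, and the "past defect" guarded by the last test are all hypothetical, invented for illustration. The point is that once tests like these are automated, they rerun on every change at almost no cost.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTests(unittest.TestCase):
    def test_typical_discount(self):
        # Unit test: the immediate work behaves as expected.
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_leaves_price_unchanged(self):
        self.assertEqual(apply_discount(19.99, 0), 19.99)

    def test_invalid_percent_is_rejected(self):
        # Regression guard: imagine a past defect where 150% produced a
        # negative price. Keeping this test stops it from coming back.
        with self.assertRaises(ValueError):
            apply_discount(100.0, 150)


def run_tests() -> unittest.TestResult:
    """Run the suite programmatically and return the result."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)


if __name__ == "__main__":
    run_tests()
```

In practice a suite like this would run automatically on every commit, so a broken function is caught minutes after it is written rather than after a tester or customer finds it.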

Automation can also help with some of the steps of the process itself if tooling allows for connections between communication tools and actual work being performed. However, the cost/benefit of this sort of automation has not been studied and may not prove out.

Automation Cannot Do Everything… Yet.

Manual testing remains valuable, even in the age of AI. Creative people hammering the system with unexpected behaviors can expose vulnerabilities and other problems that automation cannot.

While AI is being used today to write a lot of code, it is not clear if this is reducing defects or introducing them. Not enough time has passed. So far, attempts to eliminate human developers from writing code have met with failure, followed by difficult recruiting efforts to replace laid-off employees.

Automation cannot help ensure that customer needs are well-understood. At least it cannot help with that yet. The best way to shift all the way left at the earliest point in the process is completely manual: The team must be given direct contact with the customer and with the person who owns the product they are developing. This facilitates quick decisions and minimizes hand-offs involved in getting ideas from concept to built product. I have seen managers try to implement tooling to somehow automate the transmission of written requirements to teams, but my observation is that today, this is a very bad idea and likely to introduce more “That’s not what I wanted!” and higher costs.

Ineffective Schemes to Increase Capacity

We have had decades to study what does not work to increase the capacity of a system. Following is a list of things many managers believe will increase capacity which have been proven time and again to not work – or even have negative effects:

- Getting started early. “This one is important, and the deadline is pretty far in the future, so let’s start work on it now.” This results in big plans up front and big requirements up front, and it causes more rework. The farther the planning horizon, the more rework will be introduced. Starting things sooner makes things worse.[2] Work should instead be started at the last responsible moment.

- Working on more things at once. Capacity is not increased by starting more work items at the same time. The more things are moving through any system, the longer each will spend in the system, which increases the risk of defects and slows down the system overall. This was proven mathematically by Dr. Little in 1961 and is now known as Little’s Law.[3] This law is the basis of modern manufacturing capacity management, and it applies to any system where things step through a stream or workflow. Reduce the number of work items that are in process at one time (limit Work-In-Process) to make more work flow through more rapidly.

- Resource Utilization. Many managers believe that if they maximize the amount of work that everyone has, then more things will be produced more quickly. This is true when the labor force has nothing to do: increasing the number of work items requested will increase productivity and lower the cost of idle capacity. However, past a certain point, somewhere around the 50% mark, the cost of the queues created by the excess work exceeds the savings in capacity cost, and total cost rises. In other words, loading everyone up to 100% will be about as effective as giving people almost nothing to do.[4] It will magnify the impact of hand-offs and increase defects and rework, which loads people even further in an unforgiving environment. The counterintuitive thing to do, which feels wrong to many managers, is to allow people to have slack so that they are readily available when work arrives. This allows work to flow through the system effectively.
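
The arithmetic behind the last two items can be sketched in a few lines. The first function is Little's Law (L = λW) rearranged to give average time in the system. The second uses the textbook M/M/1 single-server queue formula, which is an assumption introduced here to show the shape of the curve, not a model of any particular team. The function names and all numbers (5 items per week, 1-day tasks) are hypothetical.

```python
def average_time_in_system(wip: float, throughput: float) -> float:
    """Little's Law rearranged: W = L / lambda (average items in process / throughput)."""
    if throughput <= 0:
        raise ValueError("throughput must be positive")
    return wip / throughput


def mm1_queue_wait(utilization: float, service_time: float) -> float:
    """Mean time spent waiting in an M/M/1 queue: Wq = rho / (1 - rho) * service_time."""
    if not 0 <= utilization < 1:
        raise ValueError("utilization must be in [0, 1)")
    return utilization / (1 - utilization) * service_time


# Hypothetical team that finishes 5 items per week, with throughput held fixed.
for wip in (5, 10, 20):
    weeks = average_time_in_system(wip, 5)
    print(f"WIP {wip:>2}: {weeks:.1f} weeks per item on average")

# Hypothetical tasks that each take 1 day of actual work.
for rho in (0.5, 0.8, 0.9, 0.95):
    days = mm1_queue_wait(rho, 1.0)
    print(f"utilization {rho:.0%}: {days:.1f} days waiting in queue")
```

Under these assumptions, doubling work in process doubles the average time each item spends in the system. The queue wait equals the working time at 50% utilization and grows to about 19 times the working time at 95%. Real teams will not match the M/M/1 model exactly, but the steep rise in waiting time as utilization approaches 100% is the effect described above.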

Lessons from the Factory Floor

Eliminating rework through automation to reduce defects, reducing hand-offs and delays, minimizing queues, and using shorter planning cycles can increase the potential capacity of a development organization. These ideas were not invented by software developers. They come to us from factory floors, drawing on ideas that have been researched since the 1930s.

1. Forbes Technology Council. (2023, December 26). Costly code: The price of software errors. Forbes. Retrieved from https://www.forbes.com/councils/forbestechcouncil/2023/12/26/costly-code-the-price-of-software-errors/

2. Reinertsen, D. G. (2009). Lean product and process development (2nd ed.). Celeritas Publishing.

3. Little, J. D. C. (1961). A proof for the queuing formula: L=λW. Operations Research, 9(3), 383–387. doi:10.1287/opre.9.3.383

4. Reinertsen, D. G. (2009). Lean product and process development (2nd ed., pp. 67–69). Celeritas Publishing.

Goldratt, E. M., & Cox, J. (1984). The goal: A process of ongoing improvement. North River Press.